One of the overarching themes for Data Science at Doma is generalizability. This is very important for the successful implementation of our machine learning models in our products due to the wide variety of sources of our data as well as supporting quick onboarding of new customers (for instance the cold start problem). As we march towards improved model performance, false positives can be a major obstacle. In simple terms, false positives arise in a machine learning model prediction when the calculation determines that an instance contains features that cause the model to confuse it for something that the instance is not. A simple example would be a cat confused for a dog. Both are four-legged furry creatures and so it may be forgivable for a mathematical algorithm not to recognize the difference. However, given that each individual order at Doma needs to process documents that can contain up to 100 pages or so, we must ensure that our error rates are low.

In this blog post, I will focus on how to reduce the confusion that produces false positives in computer vision models using data augmentation. Recently we independently discovered that the so-called ‘copy-paste’ technique of data augmentation is highly effective for boosting the performance of computer vision models trained on smaller datasets. The copy-paste technique augments the data set by generating additional training data via copying segments of the image corresponding to specific objects to be detected or recognized and pasting these onto other images (see below for more details). At nearly the same time as our discovery, an article about the copy-paste technique was recently posted on arxiv researchers at Google Brain [1]. The paper on arxiv focused mainly on overall model performance in an empirical sense. Here I will focus on using the technique to specifically reduce the false positive rate due to easily confused classes.

Building the Dataset

As a demonstration of this technique, we build a small dataset from the Common Objects in Context (COCO) [2] open-source data set: https://cocodataset.org. This is a very large industry-standard data set used to benchmark several computer vision tasks. For the current use case, the focus will be on object detection for classes that are easily confused. As a specific example, I will focus on bicycles versus motorcycles. These objects are clearly similar from a visual perspective: vehicles with two wheels which often are found in similar environments and ofter with one or two people riding on top.

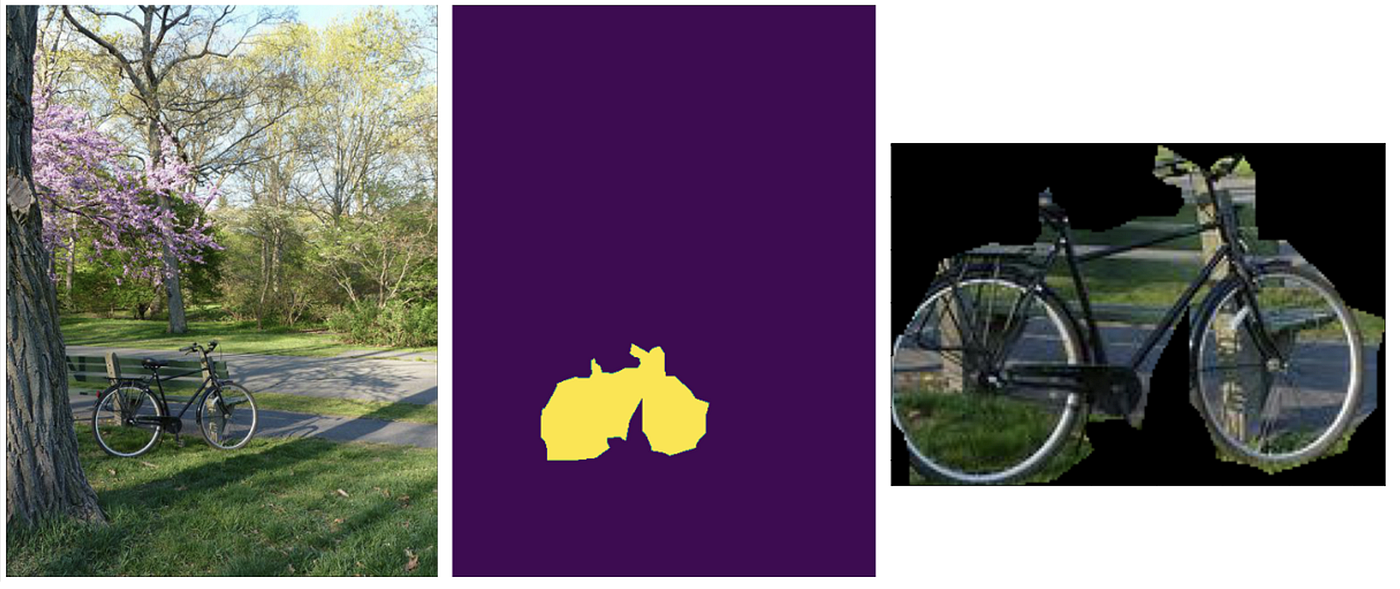

We create a single training dataset consisting of 200 images of bicycles including the bounding box annotations. However, we will leverage the mask annotations (which are polygons that indicate which pixels in the image contain the object of interest) that are also included in the COCO dataset for performing the copy-paste technique. To understand how the copy-paste data augmentation can decrease the model confusion, we will create two additional training sets that are augmented with images using the copy-paste technique, but with two different types of background images. This will allow us to explore how choices in the copy-paste technique can affect the overall performance.

Using the copy-paste method

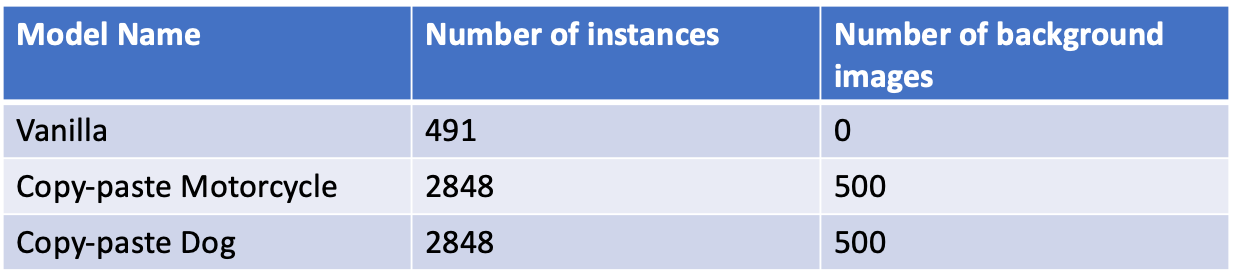

We can build up an augmented data set of bicycles but taking advantage of the masks provided by COCO. First, we chose 200 annotated images of bicycles, which were filtered using COCO’s tools to be sure no motorcycles existed in these images. Next using the masks supplied by the COCO annotations for each image, we can locate all the pixels belonging to each bicycle. Taking advantage of this we can produce cut images of each bicycle for each image. There was a total of 491 bicycle instances in the 200 image dataset that was chosen. Next, we chose 500 images that contained motorcycles but were filtered to exclude bicycles.

Our data augmentation process took each of the 491 bicycle instances from the 200 image data set that we selected from COCO and pasted each instance individually onto up to five different images that contained motorcycles and no bicycles. For those interested to learn more about how to manipulate images with python see: https://automatetheboringstuff.com/chapter17/. Figure 2 shows a couple of examples of the result of the process. Because some motorcycle images were smaller than the copied bicycle image, this process occasionally failed. One workaround would be to reduce the bicycle size. However, instead we chose to simply ignore these relatively rare cases.

Each iteration yields one extra image and one extra bicycle instance. After processing 500 motorcycle images, the copy-pasted augmented dataset had 2557 images with 2848 instances of bicycles.

Experimental Details

Regional Convolutional Neural Network (RCNN)

The regional convolutional neural network was originally introduced back in 2014 [3]. Since then there have been many iterations, and many current state of the art object detection and instance segmentation models are based on this architecture. This makes RCNNs a good choice to demonstrate how the copy paste data augmentation method can be used to reduce false positives for object detection. In the work here use the faster RCNN method [4].

For a high level introduction to RCNNs, I recommend starting at the first of a series blog posts which gives a tour of the development from the original RCNN to faster RCNN. For development we built upon the detectron2 framework.

Training

We trained three different Faster RCNNs. One was trained on 200 images of bicycles without the copy-paste data augmentation. During training, we did a simple augmentation technique of random horizontal flipping only. The increases the effective training dataset size to 400 images, which also doubles the number of bicycle instances from 491 to 982. From here on we will refer to this as the vanilla model.

A second Faster RCNN model was trained on the same 400 images but we also included the augmented images produced by copy-pasting the bicycles on images of motorcyles. Random flipping was also allowed, which resulted in a total number of bicycle instances to be 5696. However, one must bear in mind these aren’t unique in the sense of the vanilla model. This model will be referred to as the copy-paste motorcycle model.

Finally, a third Faster RCNN model was trained in exactly the same way as the copy-paste motorcycle model with one difference. Instead of using background images that motorcycles to paste the bicycles upon, the background images were those of dogs that had no bicycles.

For a fair comparison, all models were trained for 5000 iterations with a batch size of 2. In the next section we display results from these models. For a summary of these three models, please see table 1 below:

Evaluation

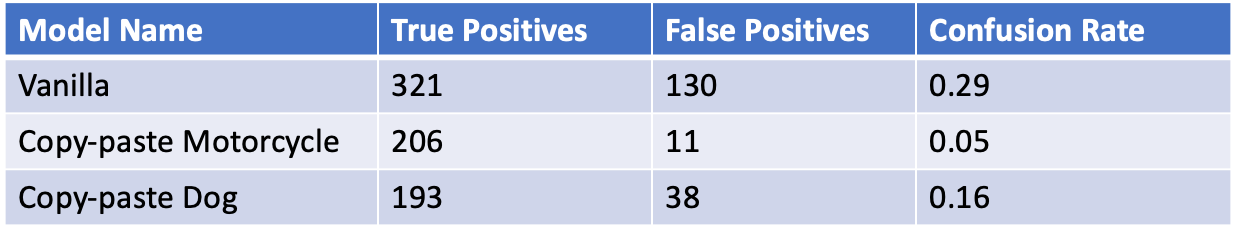

To test out how much each model confused the motorcycle class for the bicycle class, evaluation was performed on 200 images that contained both bicycles and motorcycles. The total number of bicycle instances in this data set was 965. As expected, the vanilla model confused a large number of motorcycles for bicycles whereas the copy-paste model rarely predicted a motorcycle to be bicycle

For the purpose of visual inspection, we display five pairs of results where the vanilla model confused very clear motorcycles for bicycles.

Overall the number of correctly detected bicycles (true positives) by the vanilla model was 321 and the number of motorcycles misidentified as bicycles (false positives) was 130. For the copy-paste motorcycle model, 206 bicycles were correctly identified and 11 motorcycles were misidentified as bicycles. Note that we are not considering any false positives for classes that were not actually motorcycles for both models. Essentially, we are looking at the confusion rate for motorcycles to be labeled as bicycles. In these terms, the confusion rate for the copy-paste model was only 0.05 where as for the vanilla model it was much higher at 0.29. Of course the smaller the confusion, the better. The copy-paste dog model had a reduced false positive rate as well — it only falsely identified 38 motorcycles as bicycles. However the number of true positives was the smallest of the three models at only 193. These results are summarized below in table 2:

Final Thoughts

Our demonstration of the copy-paste technique for shows that this augmentation does indeed reduce the confusion between bicycles and motorcycles and shows that this is a promising technique to apply to other cases where classes are easily confused. The cost of performing this technique is the extra work of building an additional dataset of images of classes (here motorcycles) that the model is expected to confuse with the class to be detected (here bicycles). However, this additional dataset does not require any annotations and therefore is relatively cheap to build.

We can also see that the reduction of confusion by the copy-paste augmentation technique can depend strongly on the choice of images upon which the instances are pasted. In the case of pasting the bicycles on images of only motorcycles or only dogs, we find that for the former the model has more true positives and less false positives — both of which are desirable. When pasting upon dogs, the number of false positives does decrease significantly compared to the vanilla model, just not as much as the copy-paste motorcycle model. In fact, the confusion rate for the copy-paste dog model was over 3 times higher than for the copy-paste motorcycle model.

Finally, as noted above, the annotated masks for the COCO dataset are not as clean as one might wish. If a dataset with more precise masks were used, it is expected that the copy-paste technique would give even better results as the model would likely learn less about the idiosyncrasies inherent in the annotation method and more so on the features of the actual objects to be detected. However, in spite of this, our experiment shows that the copy-paste technique can substantially reduce the false positive rate.

References:

[1] G. Ghiasi, Y. Cui, A. Srinivas, R. Qian, T. Lin, E.D. Cubuk, Q. V. Le, and B. Zoph, Simple Copy-Paste is a Strong Data Augmentation Method for Instance Segmentation (2020), arxiv.org:2012.07177v2 [cs.CV]

[2] T.-Y. Lin, M. Maire, S. Belongie, L. Bourdev, R. Girshick, J. Hays, P. Perona, D.Ramanan, C. L. Zitnick, and P. Dollár, . Microsoft COCO: Common Objects in Context (2014), arxiv:1405.0312 [cs.CV]

[3] R. Girshick, J. Donahue, T. Darrell , and M. Jitendra, Rich feature hierarchies for accurate object detection and semantic segmentation (2014), arxiv:1311.2524 [cs.CV]

[4] S. Ren, K. He, R. Girshick, and J. Sun, Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks in Advances in Neural Information Processing Systems 28 (NIPS 2015) (2015)

[5] Y. Wu and A. Kirillov, F. Massa, W. Lo and R, Girshick, Detectron2 (2019) https://github.com/facebookresearch/detectron2